How AI Video Translation Works in 2025

A practical guide to lip-sync, voice cloning, and multilingual localization

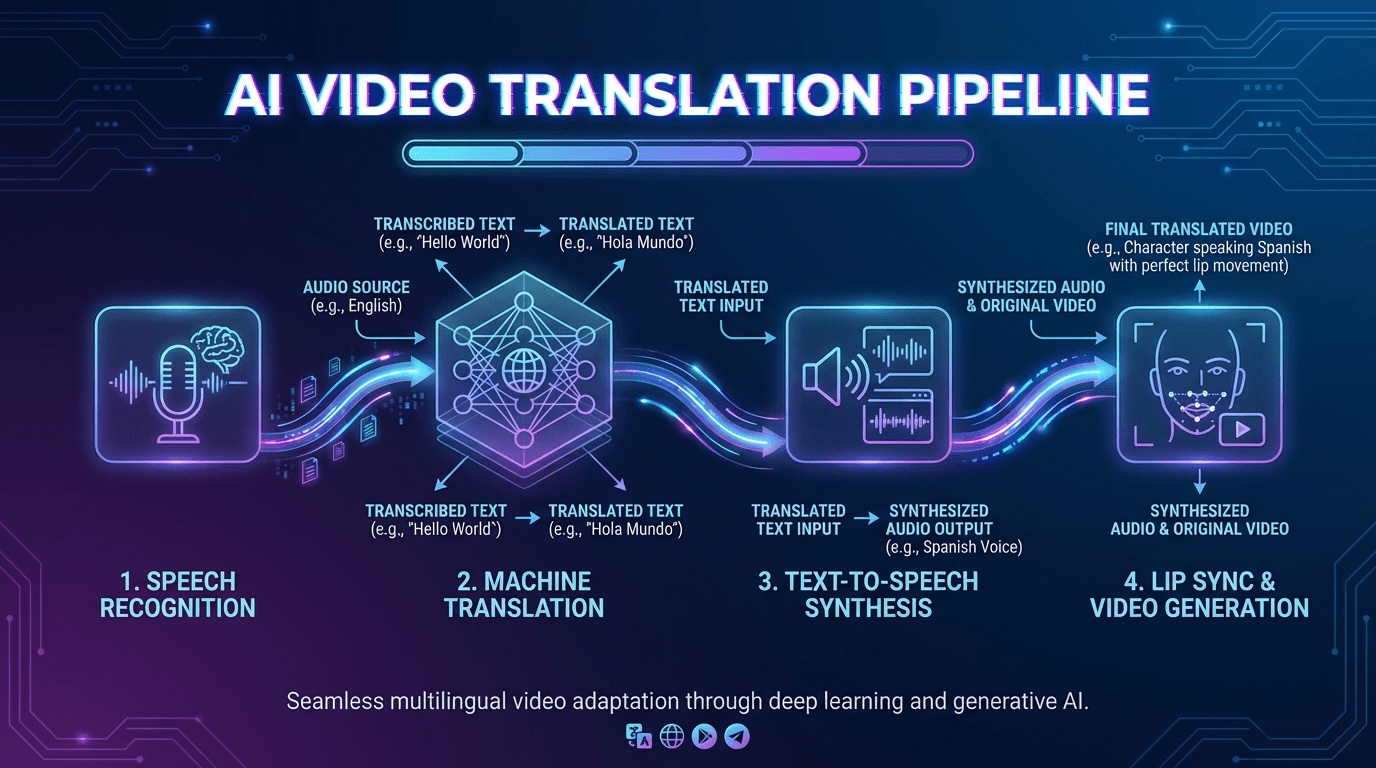

TL;DR: AI video translation combines automatic speech recognition, machine translation, text-to-speech synthesis, and lip-sync technology to convert videos into multiple languages while preserving the original speaker's voice and matching lip movements to the new audio. Modern tools advertise 95-99% translation accuracy on clear audio and support anywhere from 29 to 175+ languages depending on the platform, making global video localization possible in minutes or hours rather than weeks.

Breaking into global markets used to mean hiring voice actors, coordinating studio sessions, and spending weeks on post-production. AI video translation changes that equation entirely. Today, creators and brands can localize a single video into dozens of languages—complete with natural-sounding voiceovers and synchronized lip movements—in the time it takes to grab coffee.

This guide breaks down exactly how AI video translation works, what features matter most, and how to choose the right approach for your content. Whether you're an e-commerce seller targeting new markets or a content creator expanding your audience, understanding this technology helps you make informed decisions about your localization strategy.

How AI Video Translation Works

AI video translation operates through a four-stage pipeline that processes your original video and outputs a localized version. Understanding each stage helps you optimize your source content and set realistic expectations for output quality.

Stage 1: Automatic Speech Recognition

The process starts with ASR (Automatic Speech Recognition), which converts spoken audio into text. Modern systems can distinguish between multiple speakers, filter out background music, and handle various accents. Accuracy at this stage typically reaches 95% or higher for clear audio in supported languages.

Stage 2: Machine Translation

Once transcribed, the text passes through neural machine translation. Unlike older rule-based systems, neural translation considers context across entire sentences and paragraphs. This produces more natural phrasing, though cultural nuances may still require human review for critical content.

Stage 3: Text-to-Speech Synthesis

The translated text is converted back to audio using AI voice synthesis. This is where voice cloning comes in: advanced systems analyze your original voice characteristics and replicate them in the target language. The result sounds like you speaking the new language, not a generic AI voice.

Stage 4: Lip-Sync Integration

The final stage adjusts the speaker's mouth movements to match the new audio track. This visual synchronization makes the translated video appear natural rather than dubbed. Quality varies significantly between tools, making this feature a key differentiator when comparing video translation and dubbing platforms.
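The four stages compose into a simple chain, where each stage consumes the previous stage's output. The sketch below is a minimal illustration of that data flow, not any platform's API. Every name in it (`Pipeline`, `fake_asr`, `fake_translate`, `fake_tts`, `fake_lip_sync`) is a hypothetical stand-in; a real system would call speech, translation, and video models in place of the stubs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Pipeline:
    """Chains the four stages; each stage is a plain callable (all hypothetical)."""
    transcribe: Callable[[bytes], str]         # Stage 1: audio -> source text
    translate: Callable[[str, str], str]       # Stage 2: (text, target language) -> translated text
    synthesize: Callable[[str, str], bytes]    # Stage 3: (text, target language) -> new audio
    lip_sync: Callable[[bytes, bytes], bytes]  # Stage 4: (video, new audio) -> localized video

    def run(self, video: bytes, audio: bytes, target_lang: str) -> bytes:
        text = self.transcribe(audio)
        translated = self.translate(text, target_lang)
        dubbed_audio = self.synthesize(translated, target_lang)
        return self.lip_sync(video, dubbed_audio)


# Stub stages so the sketch runs end to end without any models.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_translate(text: str, lang: str) -> str:
    return f"[{lang}] {text}"


def fake_tts(text: str, lang: str) -> bytes:
    return text.encode("utf-8")


def fake_lip_sync(video: bytes, audio: bytes) -> bytes:
    return video + b"|" + audio


pipeline = Pipeline(fake_asr, fake_translate, fake_tts, fake_lip_sync)
result = pipeline.run(video=b"frames", audio=b"hello", target_lang="es")
print(result)  # b'frames|[es] hello'
```

Because an error in an early stage flows into every later one, a transcription mistake also ends up in the translation, the synthesized voice, and the lip-sync. That is one reason clean source audio matters so much.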

Lip-Sync and Voice Cloning Explained

Two technologies separate basic subtitle translation from professional-grade localization: lip-sync and voice cloning. Both have matured significantly in 2025, moving from experimental features to production-ready capabilities.

How Voice Cloning Preserves Authenticity

Voice cloning analyzes characteristics like pitch, timbre, speaking pace, and emotional inflection from your original audio. With as little as 60 seconds of clear speech, AI can generate a voice model that maintains your unique delivery across languages.

Leading platforms advertise voice cloning in anywhere from 29 to 130+ languages while aiming to preserve emotional authenticity. This matters particularly for creators whose personality drives engagement: your translated videos retain the energy and character that built your audience.

Platforms with strong avatar and voice cloning capabilities can handle up to 178 dialects, covering regional variations that generic translation misses.

What Makes Lip-Sync Work

Lip-sync technology modifies video frames to align mouth movements with translated audio. For optimal results, source footage should feature:

- Clear, well-lit facial visibility

- Front-facing camera angles

- Minimal obstructions (microphones, hands, glasses)

- Steady framing without rapid cuts

Tip: Processing time scales with video complexity. Expect roughly 8 minutes of processing for every 1 minute of source video when lip-sync is enabled.

Current limitations include maximum video lengths of 5-10 minutes per segment on many platforms and reduced accuracy when multiple speakers talk simultaneously. Planning your content structure around these constraints improves output quality.
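To plan around a segment cap and the rough 8-to-1 processing ratio mentioned above, a little arithmetic is enough. The sketch below assumes a 5-minute cap and splits a video into equal-length segments; both choices are illustrative. In practice you would cut at natural pauses or scene changes, and the function names are hypothetical.

```python
import math

LIP_SYNC_MINUTES_PER_SOURCE_MINUTE = 8  # rough figure quoted in the tip above


def plan_segments(duration_min: float, max_segment_min: float = 5.0) -> list[float]:
    """Split a video into the fewest equal-length segments that fit under the cap."""
    if duration_min <= 0 or max_segment_min <= 0:
        raise ValueError("durations must be positive")
    count = math.ceil(duration_min / max_segment_min)
    return [duration_min / count] * count


def estimate_processing_min(duration_min: float) -> float:
    """Estimated lip-sync processing time in minutes for the whole video."""
    return duration_min * LIP_SYNC_MINUTES_PER_SOURCE_MINUTE


segments = plan_segments(12.0, max_segment_min=5.0)
print(len(segments), segments[0])     # 3 4.0
print(estimate_processing_min(12.0))  # 96.0
```

A 12-minute video therefore becomes three 4-minute segments and roughly an hour and a half of lip-sync processing, assuming segments are processed one after another.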

Use Cases for Creators and Brands

AI video translation serves different needs across industries. The technology particularly benefits sectors where visual demonstration matters and where global reach directly impacts revenue.

E-Commerce and Cross-Border Selling

Product videos drive conversions, but language barriers limit their reach. Sellers on Amazon, Shopify, eBay, and Etsy can translate product demonstrations into target market languages without reshooting. Combined with AI product video creation, this enables rapid catalog expansion across regions.

Key benefits for e-commerce include:

- URL-to-video conversion for existing product listings

- Batch generation for large catalogs

- Platform-optimized formatting for marketplaces

Content Creators and Social Media

YouTubers, TikTok creators, and podcasters can multiply their audience without multiplying their production effort. A single video translated into 10 languages becomes available to viewers in 10 language markets while maintaining consistent branding and messaging; the actual audience gain depends on how many viewers each language adds.

Corporate Training and Education

HR teams creating onboarding materials, training departments developing compliance content, and educational institutions producing courses all benefit from multilingual localization. Consistency across languages ensures every employee or student receives identical information regardless of location.

Advertising and Marketing Teams

Campaign localization no longer requires separate production budgets per market. A single hero video can deploy across regions with localized voiceover, subtitles, and culturally adapted messaging.

Choosing the Right AI Video Translation Tool

Not all platforms deliver equal results. Evaluating options against your specific needs prevents costly trial-and-error.

Language Coverage

Support ranges from 29 languages on basic platforms to 175+ on comprehensive solutions. Match coverage to your target markets. More languages aren't better if they don't include your priority regions.

Accuracy Levels

Premium solutions deliver 95-99% translation accuracy. For business-critical content, look for platforms offering human review options. The 1-5% error rate on automated translation may be acceptable for social content but problematic for legal or medical materials.
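To see what a 1-5% error rate means in practice, it helps to turn the percentage into a count. The sketch below assumes accuracy is measured per word, which vendors do not necessarily do, and assumes a speaking rate of 150 words per minute. Both are illustrative assumptions, and `expected_errors` is a hypothetical helper.

```python
def expected_errors(word_count: int, accuracy: float) -> float:
    """Expected number of wrong words, assuming accuracy is counted per word."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return word_count * (1.0 - accuracy)


script_words = 5 * 150  # a 5-minute video at an assumed 150 words per minute
for accuracy in (0.95, 0.99):
    print(accuracy, round(expected_errors(script_words, accuracy), 1))
# 0.95 37.5
# 0.99 7.5
```

Even at 99%, a 5-minute script would be expected to contain several wrong words under these assumptions, which is why human review is worth budgeting for legal or medical material.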

Voice and Lip-Sync Quality

Request samples in your target languages before committing. Quality varies significantly between language pairs—a tool excellent for English-to-Spanish may produce poor results for English-to-Japanese.

Workflow Integration

Consider how the tool fits your production process:

- Does it accept direct URL imports from your platforms?

- Can you batch process multiple videos?

- What export formats and resolutions are supported?

- Does it integrate with your existing editing software?

Processing Speed and Limits

Understand time requirements and length restrictions. Some platforms cap videos at 5 minutes; others handle feature-length content. Processing times range from near-instant for subtitles only to several minutes per minute of source video for full lip-sync.

Step-by-Step Translation Process

Translating a video follows a consistent workflow regardless of which tool you choose. Here's the practical sequence:

- Prepare your source video. Use clear audio, good lighting, and stable framing. Remove background music if possible, since it can interfere with speech recognition.

- Upload to your chosen platform. Most accept MP4, MOV, and direct imports from YouTube or Vimeo.

- Select target languages. Choose based on your audience data, not just available options.

- Configure output settings. Decide between subtitles only, dubbed audio, or full lip-sync. Each level adds processing time and cost.

- Review automated output. Check the translation against your intent, especially for brand-specific terminology or cultural references.

- Edit as needed. Most platforms provide transcript editors for adjusting translations before final rendering.

- Export and distribute. Download files or publish directly to platforms via integrations.
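The choices made in this workflow (source file, target languages, output level, and whether a human reviews the result) can be captured in a small job description before anything is uploaded. The sketch below is one hypothetical way to model that in Python. `TranslationJob` and `OutputMode` are invented for illustration and do not correspond to any particular platform's API.

```python
from dataclasses import dataclass
from enum import Enum


class OutputMode(Enum):
    """The three output levels described in the steps above."""
    SUBTITLES = "subtitles"
    DUBBED_AUDIO = "dubbed_audio"
    FULL_LIP_SYNC = "full_lip_sync"


@dataclass(frozen=True)
class TranslationJob:
    source_path: str
    target_languages: tuple[str, ...]
    mode: OutputMode = OutputMode.SUBTITLES
    needs_human_review: bool = False

    def __post_init__(self) -> None:
        # Catch configuration mistakes before spending processing time.
        if not self.target_languages:
            raise ValueError("choose at least one target language")
        if len(set(self.target_languages)) != len(self.target_languages):
            raise ValueError("duplicate target language")

    def outputs(self) -> list[str]:
        """One deliverable per target language."""
        return [f"{lang}:{self.mode.value}" for lang in self.target_languages]


job = TranslationJob("demo.mp4", ("es", "ja"), OutputMode.DUBBED_AUDIO)
print(job.outputs())  # ['es:dubbed_audio', 'ja:dubbed_audio']
```

Writing the job down this way makes it easy to start with subtitles only and move to dubbing or lip-sync for the languages that justify the extra time and cost.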

Frequently Asked Questions

How accurate is AI video translation compared to human translators?

Modern AI achieves 95-99% accuracy for common language pairs with clear audio. Human translators still excel at cultural nuance, idioms, and specialized terminology. For maximum precision, many platforms offer human review as an add-on service.

Can AI translate videos with multiple speakers?

Yes, but accuracy depends on audio clarity. Overlapping dialogue reduces recognition accuracy significantly. Videos with distinct speaker turns and clear audio produce the best results.
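If you have a rough record of who speaks when, you can flag overlapping dialogue before uploading. The sketch below assumes speaker turns are available as (speaker, start, end) records in seconds. That format and the `find_overlaps` helper are hypothetical, not the output of any specific tool.

```python
from typing import NamedTuple


class Turn(NamedTuple):
    speaker: str
    start: float  # seconds
    end: float    # seconds


def find_overlaps(turns: list[Turn]) -> list[tuple[float, float]]:
    """Return time ranges where two different speakers talk at once."""
    overlaps = []
    ordered = sorted(turns, key=lambda t: t.start)
    for i, first in enumerate(ordered):
        for second in ordered[i + 1:]:
            if second.start >= first.end:
                break  # sorted by start, so later turns cannot overlap `first`
            if second.speaker != first.speaker:
                overlaps.append((second.start, min(first.end, second.end)))
    return overlaps


turns = [
    Turn("A", 0.0, 4.0),
    Turn("B", 3.5, 6.0),
    Turn("A", 6.0, 9.0),
]
print(find_overlaps(turns))  # [(3.5, 4.0)]
```

Ranges flagged this way are candidates for re-recording or for manual review of the transcript.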

What video formats work with AI translation tools?

Most platforms support MP4 and MOV video files, and many also accept audio-only WAV and MP3 files. Many also accept direct imports from YouTube, Vimeo, and social platforms. Output is typically delivered as MP4 or as separate subtitle files (SRT, VTT).
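For reference, an SRT subtitle file is plain text: a numbered cue, a line with start and end timestamps in `HH:MM:SS,mmm` form separated by `-->`, the subtitle text, and a blank line. The sketch below renders translated cues in that layout. The helper names and the sample Spanish lines are illustrative.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as HH:MM:SS,mmm, the timestamp style SRT uses."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(cues: list[tuple[float, float, str]]) -> str:
    """Render (start, end, text) cues as numbered SRT blocks."""
    blocks = []
    for index, (start, end, text) in enumerate(cues, start=1):
        blocks.append(
            f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n"
        )
    return "\n".join(blocks)


print(to_srt([(0.0, 2.5, "Hola y bienvenidos."), (2.5, 5.0, "Empecemos.")]))
```

Because the format is this simple, exported subtitle files are easy to inspect and correct by hand in any text editor.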

How long does AI video translation take?

Subtitle-only translation completes in minutes. Full lip-sync processing requires approximately 8 minutes per minute of source video. Batch processing larger catalogs may take hours but runs automatically once initiated.

Is my video data secure during translation?

Reputable platforms typically state that they process videos in isolated environments and do not use customer data for model training. Read each platform's privacy policy carefully before uploading, especially for sensitive corporate or unreleased content.

Conclusion

AI video translation has matured from experimental technology to a practical production tool. The combination of speech recognition, neural translation, voice cloning, and lip-sync delivers localized content that genuinely resonates with global audiences.

For creators and brands serious about international expansion, the question isn't whether to use AI translation—it's how to integrate it effectively into your workflow. Start with your highest-performing content, test outputs in priority markets, and scale based on results.

The tools exist to reach 70+ languages with professional-quality localization. The competitive advantage goes to those who act on it first.