A/B Testing Video Creatives with AI: Faster Wins for Marketers

AI-powered A/B testing changes how marketers optimize video creatives: it generates variants in minutes, scores them before launch (AdCreative.ai reports over 90% accuracy for its model), and makes testing cycles up to 2.5x faster.

TL;DR: AI-powered A/B testing lets marketers generate and test video ad variants in minutes instead of weeks. With creative scoring AI that AdCreative.ai reports as over 90% accurate, and tools that batch-generate 100+ variants, marketers can see up to 2x higher CTRs and up to 50% ROAS improvement while running tests 2.5x faster.

Video ads are among the highest-ROI formats in digital marketing, but creating winning creatives has traditionally been a slow, expensive guessing game. You film multiple versions, wait weeks for statistical significance, and hope your instincts were right. AI ad creative tools now change this process. Marketers can generate dozens of video variants in minutes, estimate which will perform best before spending a dollar, and optimize campaigns in real time.

This guide breaks down exactly how to use AI for A/B testing video creatives—from setting up your first test to scaling winning ads across platforms and languages.

Why A/B Testing Video Creatives Matters

Traditional video ad testing is fundamentally broken. You invest thousands in production, launch a campaign, and wait 2-4 weeks to learn what works. By then, you've burned budget on underperforming creatives and missed conversion opportunities.

The numbers tell the story: according to Simulmedia research, people who see an ad 6-10 times are 4.1% less likely to buy than those who see it 2-5 times. Ad fatigue is real, and it erodes ROAS fast.

The Cost of Slow Testing

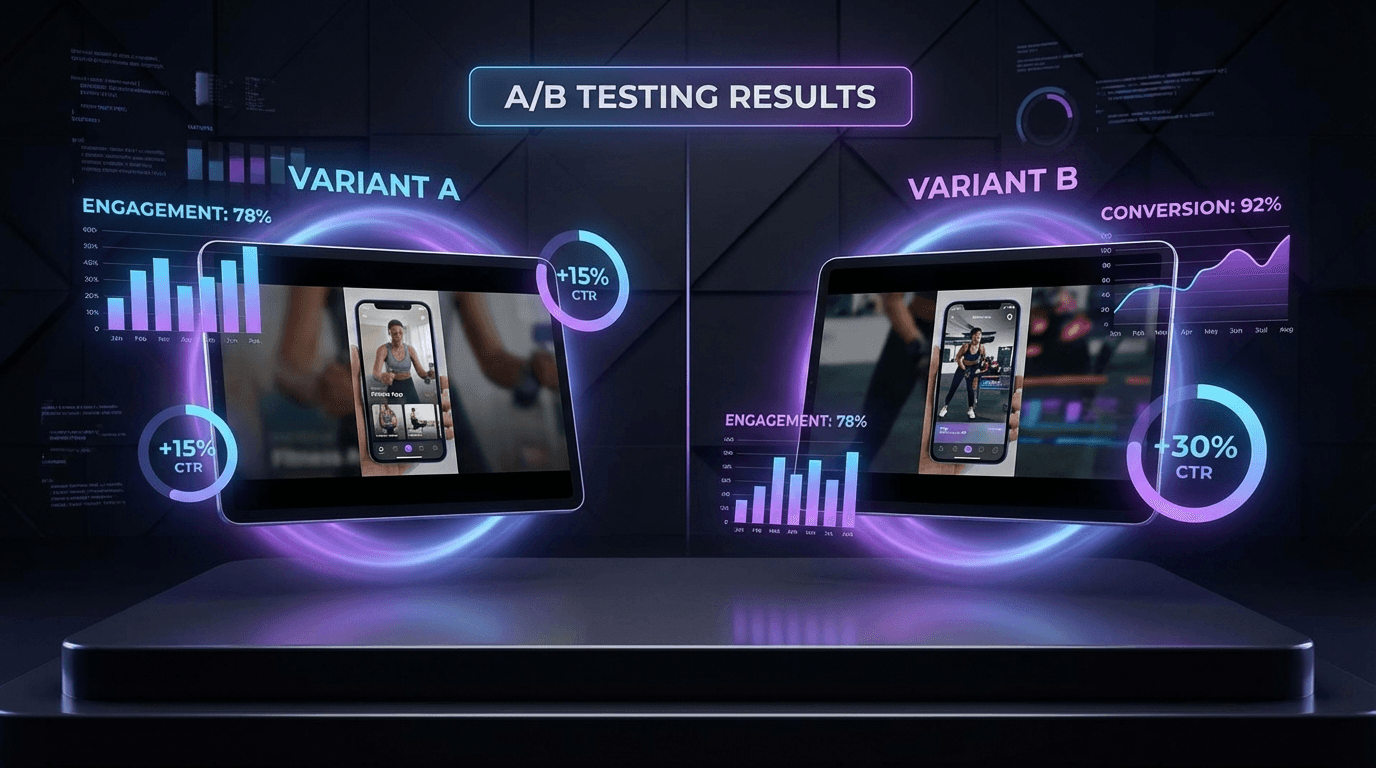

Every day you run a suboptimal creative, you lose money. A single winning video variant can lift CTR by 15-30%, which means your testing speed directly impacts your bottom line. E-commerce brands that test product-focused thumbnails against lifestyle shots often see CPAs drop by 12-18% once they scale the right creative.

What Makes Video Testing Different

Video testing requires evaluating multiple variables simultaneously: hooks, thumbnails, pacing, calls-to-action, actors, voiceovers, and music. Testing these one at a time using traditional methods could take months. AI changes this equation entirely.

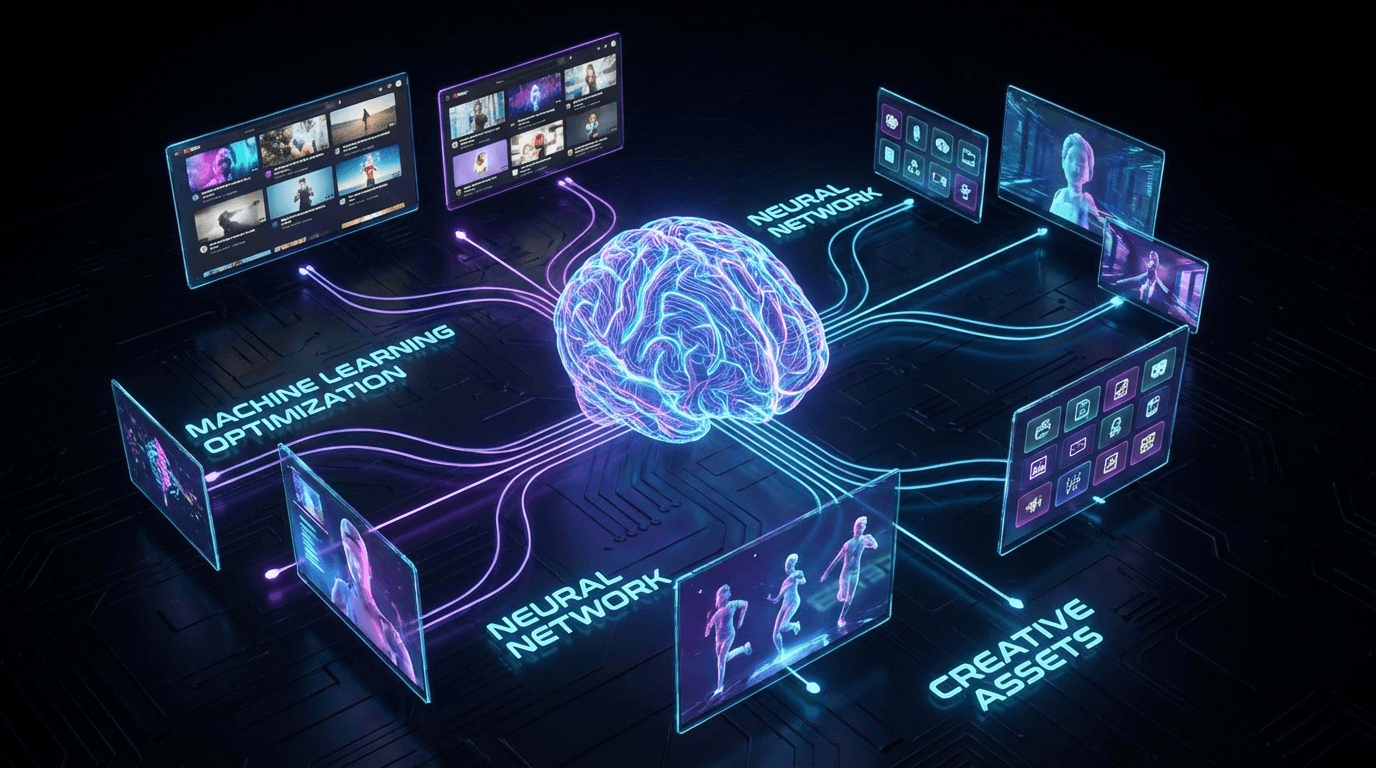

How AI Transforms Video Creative Testing

AI ad creative tools fundamentally change three aspects of video testing: speed, scale, and prediction accuracy.

Speed: From Weeks to Minutes

Traditional creative testing required weeks of production and data collection. AI generates video variants in minutes. Instead of filming multiple versions, you input your content—a product URL, script, or existing footage—and AI produces dozens of variations with different hooks, avatars, and visual treatments.

AI video ad creation tools now handle the entire pipeline: script generation, avatar selection, voiceover, and final rendering. What took a production team days now happens in the time it takes to grab coffee.

Scale: 100+ Variants Without 100x the Cost

The Häagen-Dazs case study from AdCreative.ai demonstrates what's possible: 150+ creatives generated per product, resulting in a $1.70 CPM reduction and over 11,000 clicks in a single month. This level of creative volume was previously impossible without massive production budgets.

Prediction: 90%+ Accuracy Before Launch

Creative Scoring AI analyzes your ad variants before you spend money. According to AdCreative.ai, its model predicts ad performance with over 90% accuracy. This means you can filter out likely losers before launch and focus budget on predicted winners, then confirm those predictions with a live test.

Scaling Video Variants with AI

The real power of AI testing emerges when you scale variant creation systematically. Here's how to build a testing matrix that compounds performance gains.

Building Your Creative Matrix

Advanced teams structure testing as a matrix: Hook × Visual × CTA × Actor. Instead of testing individual elements, you validate winning combinations. This layered approach reduces creative fatigue, builds a scalable asset library, and creates durable performance gains.
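The Hook × Visual × CTA × Actor matrix can be sketched in a few lines of Python. This is a minimal illustration, not any tool's actual API: the element pools, the `build_matrix` helper, and the `v001`-style IDs are all hypothetical names chosen for the example.

```python
from itertools import product

# Hypothetical element pools; replace with your own creative options.
hooks = ["problem-solution", "feature-focused"]
visuals = ["product close-up", "lifestyle"]
ctas = ["Shop now", "Learn more"]
actors = ["presenter A", "presenter B"]

def build_matrix(hooks, visuals, ctas, actors):
    """Return every Hook x Visual x CTA x Actor combination as a dict."""
    combos = product(hooks, visuals, ctas, actors)
    return [
        {"id": f"v{i:03d}", "hook": h, "visual": v, "cta": c, "actor": a}
        for i, (h, v, c, a) in enumerate(combos, start=1)
    ]

matrix = build_matrix(hooks, visuals, ctas, actors)
print(len(matrix))   # 2 * 2 * 2 * 2 = 16 combinations
print(matrix[0])
```

The count grows multiplicatively, so two options per element already produce 16 variants. That is why a full matrix is usually pruned before any budget is spent on it.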

With AI avatars and voice cloning, you can test the same script with different presenters instantly. Create versions with male and female voices, different accents, or varying emotional tones—all from a single input.

Batch Generation for E-Commerce

For cross-border sellers on Amazon, Shopify, or eBay, AI enables one-click URL-to-video conversion. Input your product listing, and AI generates multiple video variants with different angles: feature-focused, benefit-driven, problem-solution, or testimonial-style.

Tip: Start with 5-10 variants per product, run the test for 7-14 days or until results reach statistical significance, then scale the top 2-3 performers across platforms.

Multivariate Testing at Scale

While basic A/B tests compare two variants, AI makes true multivariate testing practical. Google Ads supports testing up to 10 variants simultaneously. Analyzing the results then shows not just which creative wins, but which specific elements (opening hook, CTA placement, video length) drive performance.
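One simple way to see which element drives performance is to pool results across every variant that shares that element. The sketch below assumes you can export impressions and clicks per variant. The numbers are made up for illustration, and `ctr_by_element` is a hypothetical helper. It looks only at main effects and ignores interactions between elements.

```python
from collections import defaultdict

# Made-up results for illustration only: (hook, cta, impressions, clicks).
results = [
    ("problem-solution", "Shop now", 10_000, 180),
    ("problem-solution", "Learn more", 10_000, 150),
    ("feature-focused", "Shop now", 10_000, 120),
    ("feature-focused", "Learn more", 10_000, 110),
]

def ctr_by_element(rows, index):
    """Pool impressions and clicks over all variants sharing one element value."""
    totals = defaultdict(lambda: [0, 0])  # value -> [impressions, clicks]
    for row in rows:
        totals[row[index]][0] += row[2]
        totals[row[index]][1] += row[3]
    return {value: clicks / imps for value, (imps, clicks) in totals.items()}

hook_ctr = ctr_by_element(results, 0)  # pooled CTR per hook
cta_ctr = ctr_by_element(results, 1)   # pooled CTR per CTA
print(hook_ctr)
print(cta_ctr)
```

In this toy data the gap between hooks is larger than the gap between CTAs, which would suggest prioritizing hook experiments next.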

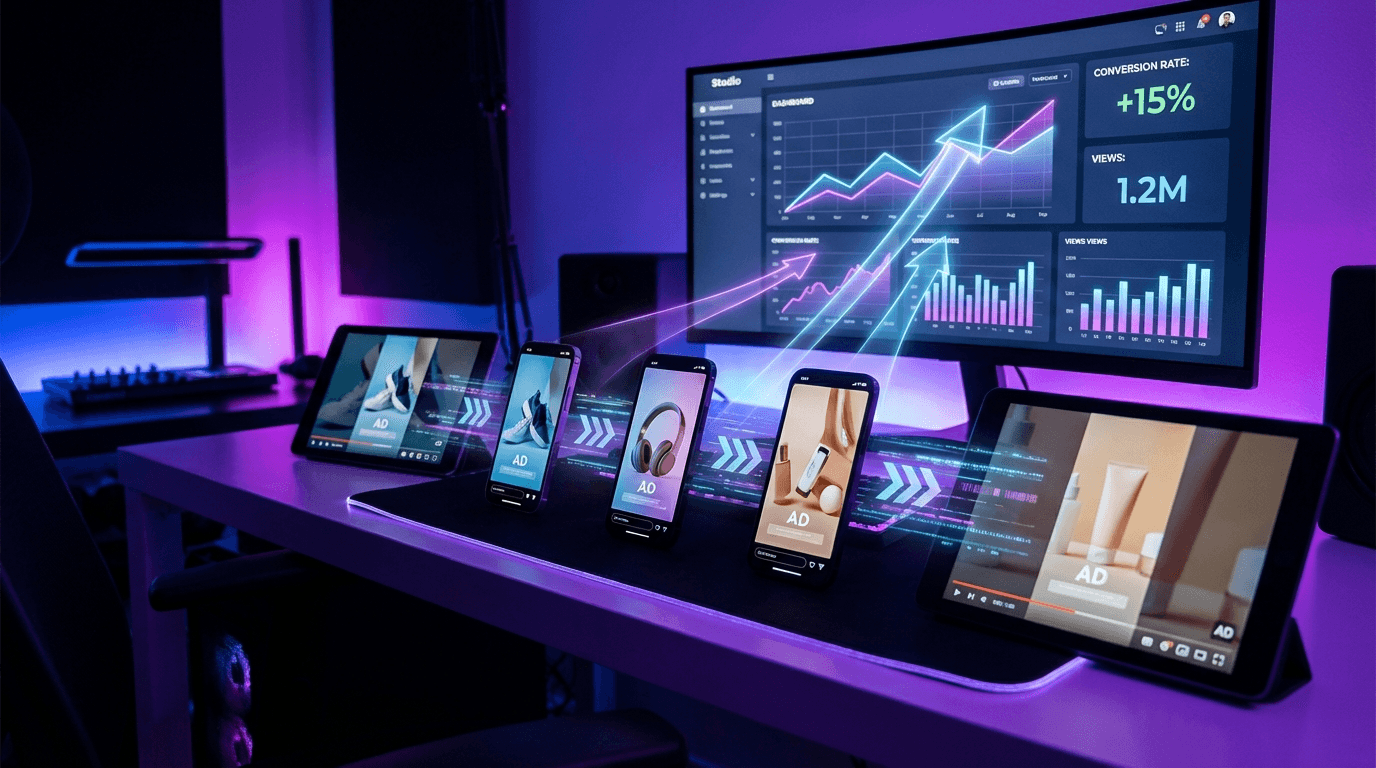

Cross-Platform Video Testing Strategies

Video ads perform differently across platforms. A winning TikTok creative may fail on YouTube. AI tools help you optimize for each platform's unique requirements.

Platform-Specific Optimization

Each platform has distinct best practices:

- TikTok/Reels: Vertical format, hook in first 3 seconds, native-feeling content

- YouTube: Horizontal format, longer watch times, clear branding

- Meta/Facebook: Square or vertical, captions essential, emotional hooks

AI enables batch generation of platform-optimized variants from a single source creative. Video translation and dubbing capabilities extend this further—test the same creative across 70+ languages with lip-sync dubbing.

Budget Allocation Strategy

Start with 20% of your ad budget on testing and 80% on scaling winners. Run initial tests on broad audiences so each variant collects impressions quickly; narrow audiences slow data collection and delay statistical significance. Once a variant beats the others at a 95% confidence level, shift budget aggressively toward it.
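As a rough sketch of the 20/80 split, the helper below divides a budget into a testing pool shared evenly across variants and a scaling pool. The function name, the even split, and the example figures are assumptions for illustration.

```python
def split_budget(total_budget, num_test_variants, test_share=0.20):
    """Split a budget into a testing pool (shared evenly) and a scaling pool."""
    if num_test_variants < 1:
        raise ValueError("need at least one test variant")
    if not 0 < test_share < 1:
        raise ValueError("test_share must be between 0 and 1")
    test_pool = total_budget * test_share
    return {
        "test_pool": test_pool,
        "per_variant": test_pool / num_test_variants,
        "scale_pool": total_budget - test_pool,
    }

# Example: a 10,000 budget with 5 variants in test.
plan = split_budget(10_000, num_test_variants=5)
print(plan)
```

If the per-variant figure is too small to buy a meaningful number of impressions, that is a signal to test fewer variants rather than to stretch the pool.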

Google recommends running video experiments for a minimum of 7 days, with most tests achieving statistical significance within 30 days.

Best Practices for AI-Powered Video Testing

Successful AI creative testing requires systematic methodology. Here's what top performers do differently.

Start with Clear Hypotheses

Before generating variants, define what you're testing. "Will a problem-solution hook outperform a feature-focused hook?" beats "Let's try some new creatives." Clear hypotheses lead to actionable insights.

Use market trend analysis to identify what competitors are testing and find gaps in the market.

Isolate Variables When Possible

While AI enables multivariate testing, simpler tests produce clearer insights. Test one major element at a time when you're learning what works for your audience. Save complex multivariate tests for optimization once you've established baseline performance.

Document and Build Your Creative Library

Every test generates learnings. Track which hooks, CTAs, and visual treatments work for each audience segment. Over time, you build a library of proven creative elements that compound into consistent performance.

Avoid Common Mistakes

- Don't declare winners too early—wait for 95% statistical confidence

- Don't test too many variants simultaneously with small budgets

- Don't ignore audience segmentation—a winning creative for one segment may fail for another
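To make "95% statistical confidence" concrete, here is a minimal sketch of a two-sided two-proportion z-test, one common way to compare the conversion rates of two variants. Ad platforms may use different methods internally. The function name and the example counts are illustrative assumptions.

```python
from math import erf, sqrt

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value

# Illustrative counts: variant A converts 200 of 10,000, variant B 260 of 10,000.
z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=260, n_b=10_000)
significant = p < 0.05  # 95% confidence corresponds to p < 0.05
print(round(z, 2), significant)
```

When several variants are compared at once, the chance of a false winner rises with each extra comparison, which is another reason to keep variant counts modest on small budgets.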

Frequently Asked Questions

How long should I run an AI video ad A/B test?

Most platforms recommend a minimum of 7 days, with optimal results typically achieved between 14 and 30 days. Statistical significance requires enough impressions and conversions that the observed difference between variants would occur by chance less than 5% of the time (a 95% confidence level). Start with broader audiences to accelerate data collection.
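A rough way to estimate test duration is to compute the sample size each variant needs and divide by daily traffic. The sketch below uses the standard normal-approximation formula for comparing two proportions. The 80% power default, the function names, and the example rates are assumptions, not figures from any platform.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate impressions per variant needed to detect a relative lift."""
    if relative_lift == 0:
        raise ValueError("relative_lift must be non-zero")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

def days_needed(n_per_variant, num_variants, daily_impressions):
    """Days to collect the required sample across all variants."""
    return ceil(n_per_variant * num_variants / daily_impressions)

# Example: 2% baseline rate, hoping to detect a 20% relative lift.
n = sample_size_per_variant(baseline_rate=0.02, relative_lift=0.20)
print(n, days_needed(n, num_variants=2, daily_impressions=5_000))
```

Smaller expected lifts require much larger samples, so tests that chase marginal improvements tend to run toward the long end of the 14-30 day range.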

Can AI predict video ad performance before I spend money?

Yes. Creative Scoring AI tools analyze visual elements, copy, and historical performance data to predict results with over 90% accuracy according to AdCreative.ai. While not perfect, this pre-launch filtering significantly reduces wasted ad spend on underperforming variants.

How many video variants should I test at once?

For most budgets, 3-5 variants provide meaningful data without spreading budget too thin. Enterprise advertisers with larger budgets can test 10+ variants using platforms like Google Ads' Custom experiments. Start smaller and scale as you learn what works.

What metrics matter most for video ad testing?

Focus on metrics tied to business outcomes: CTR for awareness, view-through rate for engagement, and conversion rate for direct response. Avoid optimizing for vanity metrics like video views unless they directly correlate with your goals.
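These three metrics can be computed from raw counts as sketched below. Definitions vary by platform, so the formulas here are assumptions: CTR as clicks per impression, view-through rate as views per impression, and conversion rate as conversions per click. The function name and the example counts are illustrative.

```python
def video_ad_metrics(impressions, views, clicks, conversions):
    """Compute CTR, view-through rate, and conversion rate from raw counts."""
    def ratio(numerator, denominator):
        # Avoid division by zero for variants with no traffic yet.
        return numerator / denominator if denominator else 0.0

    return {
        "ctr": ratio(clicks, impressions),
        "view_through_rate": ratio(views, impressions),
        "conversion_rate": ratio(conversions, clicks),
    }

# Illustrative counts for one variant.
metrics = video_ad_metrics(impressions=10_000, views=2_500, clicks=200, conversions=10)
print(metrics)
```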

Conclusion

AI has fundamentally changed video creative testing for marketers. What once required weeks of production and weeks of testing can now happen in days. The headline figures are compelling: up to 2x higher CTRs, up to 50% ROAS improvement, and 2.5x faster testing cycles.

The key is starting systematically. Begin with clear hypotheses, generate variants at scale, use predictive scoring to filter before launch, and document learnings to build compounding advantages.

For e-commerce sellers and marketing teams ready to accelerate their video ad performance, AI-powered creative testing isn't optional—it's the new baseline for competitive performance.