AI Lip Sync Explained: How Video Localization Stays Natural

AI lip sync technology matches mouth movements to translated audio, enabling natural-looking multilingual videos without reshoots. Learn how it works and why it matters for global content.

TL;DR: AI lip sync technology automatically matches mouth movements to translated audio, so dubbed videos look natural in every supported language. This lets businesses localize video content for global audiences without expensive reshoots or manual animation work.

Creating video content for international markets used to mean one of two options: expensive reshoots with native speakers or awkward voice-overs that never quite match the speaker's lips. Neither option scales well for businesses targeting multiple regions.

AI lip sync changes this equation entirely. The technology analyzes facial movements frame by frame, then adjusts mouth shapes to match translated audio naturally. The result? A single video can speak dozens of languages while maintaining the authentic feel of the original recording.

For e-commerce sellers on Amazon, Shopify, or TikTok Shop, this means product demos that connect with customers in their native language. For training departments, it means onboarding materials that work across global offices. The technology has moved from experimental to production-ready, and understanding how it works helps you leverage it effectively.

How AI Lip Sync Technology Works

The core process behind AI lip sync combines computer vision with deep learning to create seamless results. Understanding these mechanics helps you set realistic expectations and optimize your content for the best outcomes.

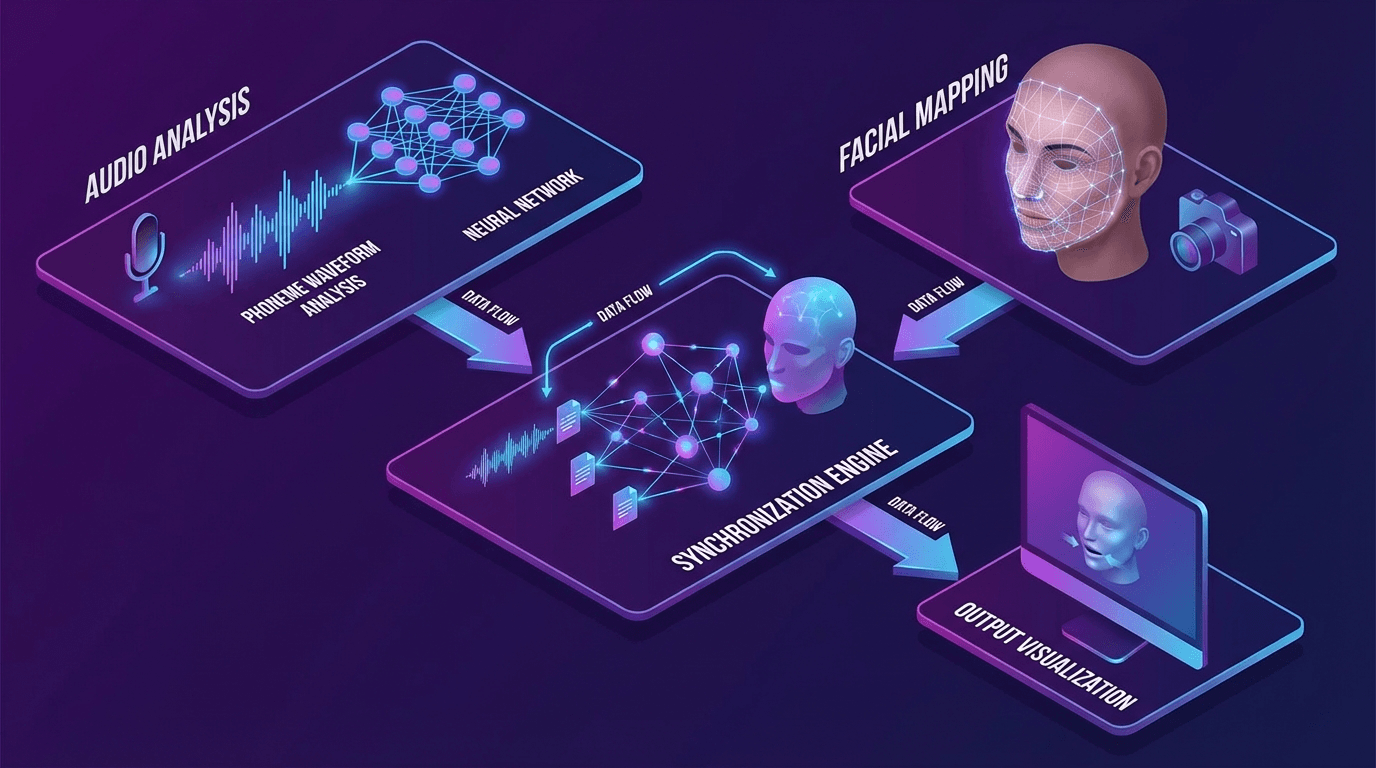

Facial Detection and Mapping

The AI first identifies key facial landmarks in your video: lips, jaw, cheeks, and surrounding areas. It creates a detailed mesh of tracking points that follow every micro-movement. This mapping happens frame by frame. Standard video runs at 24-30 frames per second, so every second of footage yields 24-30 frames to analyze.

Modern systems track not just the mouth but also supporting facial movements. When you speak, your cheeks flex, your jaw shifts, and subtle expressions accompany your words. Quality lip sync systems capture all of these elements to keep the result looking natural.
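To make the mapping step concrete, here is a minimal Python sketch of one measurement a tracker might derive from mouth landmarks: how open the mouth is relative to its width. The four landmark names and the functions `mouth_open_ratio` and `frames_to_track` are assumptions made for illustration. Production systems track far denser meshes and do not necessarily compute this exact value.

```python
from dataclasses import dataclass
from math import dist


@dataclass(frozen=True)
class MouthLandmarks:
    """Four hypothetical 2D mouth points (pixel coordinates) for one frame."""
    left_corner: tuple[float, float]
    right_corner: tuple[float, float]
    upper_lip: tuple[float, float]
    lower_lip: tuple[float, float]


def mouth_open_ratio(m: MouthLandmarks) -> float:
    """Vertical lip gap divided by mouth width; 0.0 means the lips are closed."""
    width = dist(m.left_corner, m.right_corner)
    if width == 0:
        raise ValueError("mouth corners coincide; cannot normalize")
    return dist(m.upper_lip, m.lower_lip) / width


def frames_to_track(duration_s: float, fps: float) -> int:
    """Number of frames that need landmark tracking for a clip."""
    return round(duration_s * fps)


frame = MouthLandmarks((0, 0), (40, 0), (20, -5), (20, 5))
print(mouth_open_ratio(frame))   # lip gap of 10 px over a 40 px wide mouth
print(frames_to_track(60, 30))   # a one-minute clip at 30 fps
```

Dividing by mouth width keeps the value comparable when the speaker moves closer to or farther from the camera.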

Audio Analysis and Phoneme Mapping

Simultaneously, the system breaks down the target audio into phonemes, the distinct sounds that make up speech. Each phoneme maps to a mouth shape, often called a viseme, and several phonemes can share one: "p", "b", and "m" all close the lips. The sound "oh" requires rounded lips, while "ee" stretches them horizontally.

The AI learns these phoneme-to-mouth-shape mappings from thousands of hours of video data. It also learns the timing relationships between sounds and movements, including the transitions between different mouth positions.
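The idea of mapping sounds to mouth shapes can be sketched with a small lookup table. The Python example below is illustrative only: real systems learn these relationships from data rather than reading a hand-written table. The phoneme symbols are ARPAbet-style, while the mouth-shape class names (sometimes called visemes) and the function `to_viseme_track` are assumptions chosen for the example.

```python
# Illustrative phoneme -> mouth-shape table; class names are hypothetical.
PHONEME_TO_VISEME = {
    "OW": "rounded",        # "oh"
    "IY": "spread",         # "ee"
    "AA": "open",           # "ah"
    "P": "closed", "B": "closed", "M": "closed",
    "F": "lip_to_teeth", "V": "lip_to_teeth",
}


def to_viseme_track(phonemes):
    """Convert (symbol, start_s, end_s) phonemes into timed mouth shapes.

    Back-to-back phonemes that share a mouth shape are merged into one
    segment, since the lips do not need to move between them.
    """
    track = []
    for symbol, start, end in phonemes:
        viseme = PHONEME_TO_VISEME.get(symbol, "neutral")
        if track and track[-1][0] == viseme and track[-1][2] == start:
            track[-1] = (viseme, track[-1][1], end)
        else:
            track.append((viseme, start, end))
    return track


timed_phonemes = [("M", 0.0, 0.1), ("AA", 0.1, 0.3), ("P", 0.3, 0.4), ("B", 0.4, 0.5)]
for segment in to_viseme_track(timed_phonemes):
    print(segment)
```

Unknown symbols fall back to a "neutral" shape here so the sketch never fails on unexpected input; a real pipeline would handle them more carefully.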

Synchronization and Rendering

The final step merges the facial mapping with the audio analysis. The AI calculates exactly when and how to modify each frame so mouth movements match the new audio track. This includes:

- Adjusting lip positions to match phoneme timing

- Blending transitions smoothly between mouth shapes

- Preserving natural head movements and expressions

- Maintaining consistent lighting and skin texture

The rendering process applies these modifications while keeping everything else in the frame untouched. When the process works well, viewers cannot distinguish AI-adjusted footage from original recordings.
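The blending step in the list above can be illustrated with plain linear interpolation. The Python sketch below takes hypothetical keyframes of mouth openness (one per phoneme boundary) and produces one value per video frame. The function names are assumptions, and production systems likely use learned models rather than straight-line blending.

```python
def interpolate(keyframes, t):
    """Linearly blend between the two keyframes surrounding time t."""
    if t <= keyframes[0][0]:
        return keyframes[0][1]
    if t >= keyframes[-1][0]:
        return keyframes[-1][1]
    for (t0, v0), (t1, v1) in zip(keyframes, keyframes[1:]):
        if t0 <= t <= t1:
            if t1 == t0:  # duplicate timestamps: take the later value
                return v1
            weight = (t - t0) / (t1 - t0)
            return v0 + weight * (v1 - v0)


def frame_values(keyframes, fps, duration_s):
    """Return one mouth-openness value per frame for the whole clip."""
    if not keyframes:
        raise ValueError("at least one keyframe is required")
    ordered = sorted(keyframes)
    n_frames = round(duration_s * fps)
    return [interpolate(ordered, i / fps) for i in range(n_frames)]


# Mouth goes from closed (0.0) to fully open (1.0) over half a second.
print(frame_values([(0.0, 0.0), (0.5, 1.0)], fps=4, duration_s=1.0))
```

Frames that fall before the first keyframe or after the last one simply hold the nearest value, which avoids sudden jumps at the clip boundaries.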

AI Lip Sync vs Traditional Dubbing

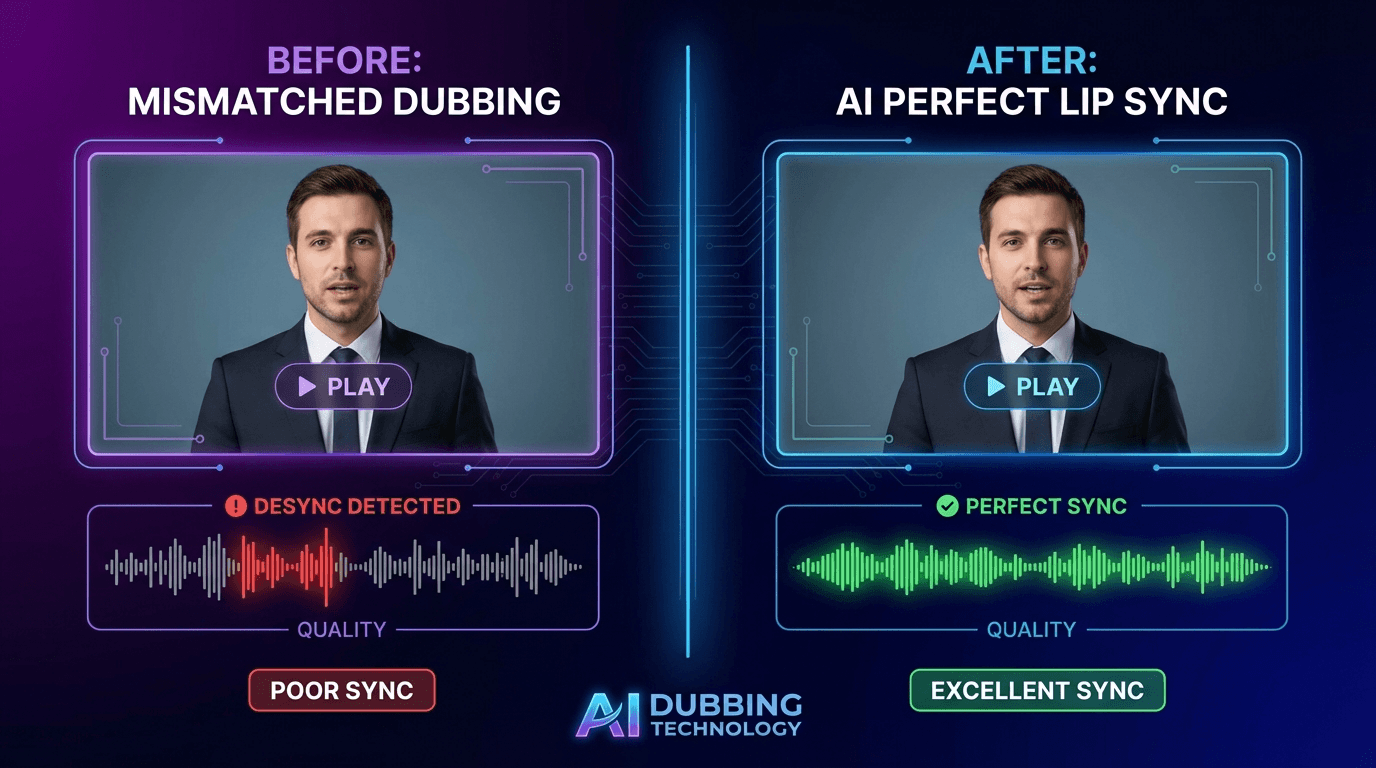

Traditional dubbing has served the film and media industry for decades, but the gap between old methods and AI-powered solutions grows wider each year.

The Voice-Over Problem

Standard voice-over simply layers translated audio over the original video. The speaker's lips move in one language while you hear another. This disconnect distracts viewers and reduces engagement, because audiences tend to prefer content where visual and audio cues align.

Traditional lip sync dubbing attempts to solve this by hiring translators who craft scripts matching the original mouth movements. This artisan approach works for major film releases with substantial budgets but falls apart for business video at scale.

Cost and Speed Comparison

Manual dubbing for a single video minute can cost $1,200 or more when you factor in voice talent, translation, studio time, and post-production. AI lip sync alternatives typically reduce these costs by 70-90%, according to industry providers.
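As a quick worked example of the figures above, the Python sketch below applies the quoted 70-90% reduction to the $1,200-per-minute manual estimate. The function name `ai_cost_range` and its parameters are assumptions made for illustration, and actual pricing varies by provider.

```python
def ai_cost_range(manual_cost_per_min, minutes, min_reduction_pct=70, max_reduction_pct=90):
    """Return (manual_total, ai_low, ai_high) for a video of the given length."""
    manual_total = manual_cost_per_min * minutes
    # The largest reduction gives the lowest AI cost, and vice versa.
    ai_low = manual_total * (100 - max_reduction_pct) / 100
    ai_high = manual_total * (100 - min_reduction_pct) / 100
    return manual_total, ai_low, ai_high


manual, low, high = ai_cost_range(1200, minutes=1)
print(f"Manual: ${manual:,.0f}  AI estimate: ${low:,.0f} to ${high:,.0f}")
```

For one minute of video, a 70-90% reduction on $1,200 works out to roughly $120 to $360.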

Speed differences prove equally dramatic. Traditional dubbing requires weeks of coordination between translators, voice actors, and editors. AI systems process the same content in minutes, enabling same-day localization for time-sensitive campaigns.

Quality Considerations

Early AI lip sync tools produced uncanny results: close enough to the real thing that viewers noticed something was off, which proved worse than obvious dubbing. Current systems have largely solved this problem for typical business video scenarios.

The technology performs best with forward-facing speakers, clear lighting, and minimal face obstruction. Extreme angles, heavy makeup, or unusual facial features can still challenge some systems. Understanding these limitations helps you plan content that maximizes AI processing quality.

Real-World Applications for Video Localization

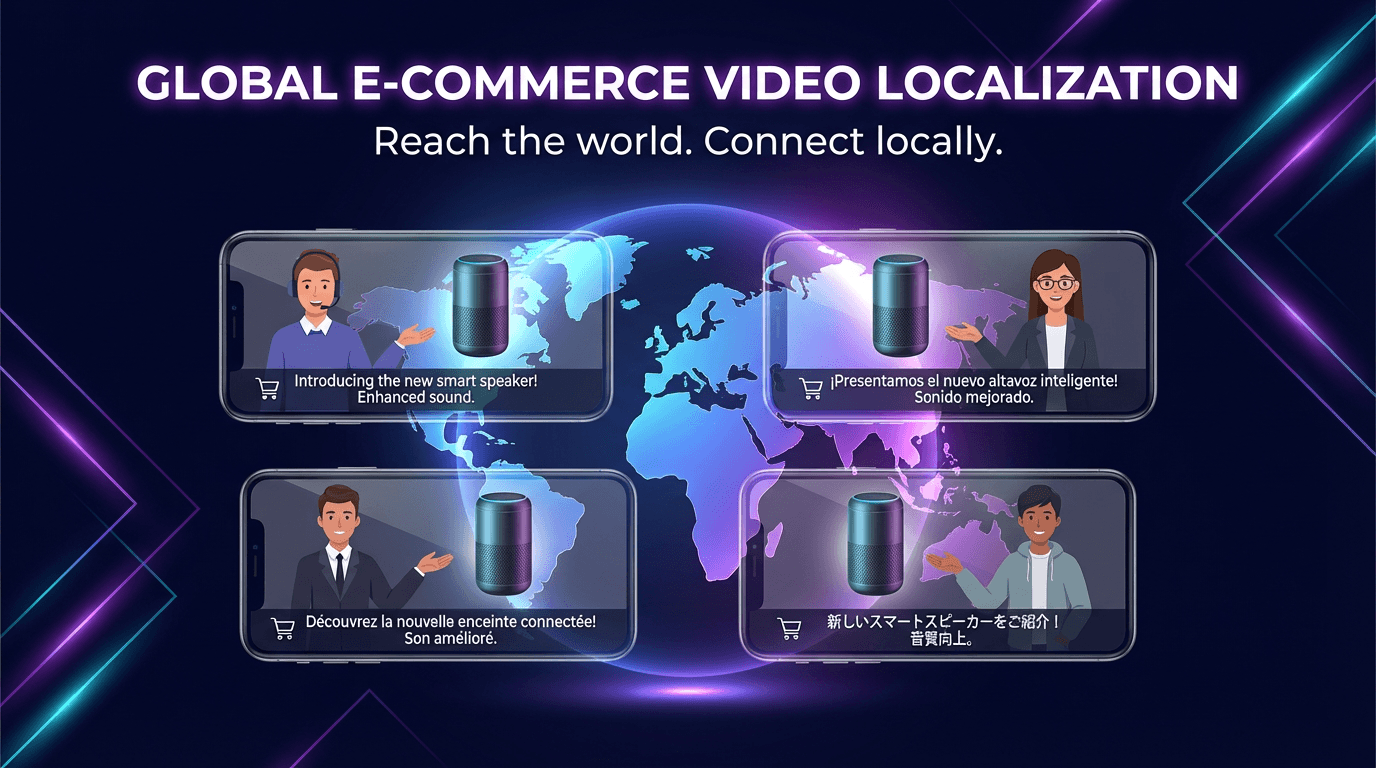

AI lip sync unlocks practical use cases across industries. The common thread: turning single-language content into multilingual assets without proportional cost increases.

E-Commerce Product Videos

Cross-border sellers face a fundamental challenge: product videos that convert in one market often fail in others due to language barriers. A compelling product demo in English loses impact when shown to Spanish-speaking customers with subtitles.

Video translation and dubbing with lip sync solves this by creating native-feeling versions for each target market. Amazon sellers can produce one product walkthrough, then generate localized versions for Germany, Japan, France, and any other marketplace they target.

The efficiency gains compound when you consider AI product video creation workflows. Generate the base video once, then localize it across 70+ languages without additional filming.

Corporate Training and Onboarding

Global enterprises spend heavily on training content that often exists only in headquarters languages. AI lip sync enables rapid localization of onboarding materials, compliance training, and skill development videos.

The technology particularly shines for talking-head content where a trainer or executive delivers information directly to camera. These formats benefit most from natural lip sync because viewers focus on the speaker's face throughout.

Social Media Marketing

Platform algorithms favor content that keeps viewers engaged. Videos with mismatched audio and visuals lose viewers faster than naturally synchronized content. For brands running multilingual TikTok or Instagram campaigns, AI lip sync maintains engagement metrics across language versions.

The speed advantage matters here as well. Trending content has a short window of relevance. AI localization enables same-day deployment across markets, capturing trend momentum before it fades.

Best Practices for Natural Results

Getting optimal output from AI lip sync requires attention to input quality and some understanding of what helps the technology perform best.

Recording Tips for Lip Sync Optimization

Frame your speaker from the shoulders up with their face clearly visible. Avoid harsh shadows across the mouth area, and ensure consistent lighting throughout the recording. These basics dramatically improve AI processing accuracy.

Keep facial movements natural rather than exaggerated. The AI learns from real speech patterns, so theatrical over-enunciation often produces worse results than relaxed, conversational delivery.

Tip: Record at the highest resolution your workflow supports. AI lip sync performs frame-by-frame analysis, and more pixel data means more accurate facial mapping.

Audio Quality Standards

The target audio track needs clarity for accurate phoneme detection. Background noise, heavy compression, or overlapping voices challenge the analysis phase. Clean audio with natural speech pacing produces the most convincing synchronization.

Match audio energy levels to the original recording. A whispered translation applied to footage of someone speaking enthusiastically creates cognitive dissonance even when lip sync is technically accurate.

Batch Processing Workflows

For large content libraries, establish templates and consistent recording standards. When all source videos follow the same framing and lighting guidelines, batch processing delivers consistent quality across your entire catalog.

Many platforms support bulk uploads with automated language detection and processing. This brings turnaround for high-volume localization down to minutes per video.
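One way to organize a batch run is to generate a job list that pairs every source video with every target language before uploading. The Python sketch below is a generic illustration under that assumption: the function `build_jobs`, the job fields, and the output naming scheme are all hypothetical and are not tied to any particular platform's API.

```python
from itertools import product
from pathlib import PurePosixPath


def build_jobs(videos, languages):
    """Pair every source video with every target language code."""
    jobs = []
    for video, lang in product(videos, languages):
        src = PurePosixPath(video)
        jobs.append({
            "source": str(src),
            "language": lang,
            # Hypothetical naming scheme: demo.mp4 -> demo.de.mp4
            "output": f"{src.stem}.{lang}{src.suffix}",
        })
    return jobs


for job in build_jobs(["demo.mp4", "unboxing.mp4"], ["de", "ja", "fr"]):
    print(job)
```

Keeping the manifest separate from the upload step makes it easy to review which language versions will be produced before any processing starts.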

Frequently Asked Questions

How accurate is AI lip sync compared to manual dubbing?

Modern AI lip sync achieves accuracy levels that most viewers cannot distinguish from original recordings in standard business video contexts. The technology works best with front-facing speakers and clear lighting. Complex scenarios like extreme camera angles or multiple overlapping speakers may still benefit from manual refinement.

What languages does AI lip sync support?

Leading platforms support anywhere from 70 to more than 140 languages, including major global languages and regional dialects. The technology works across language families, handling transitions between languages with different speech rhythms and phoneme structures. Support for less common languages continues to expand.

Can AI lip sync handle multiple speakers in one video?

Yes, advanced systems support multi-speaker scenarios by tracking and processing each face independently. Panel discussions, interviews, and dialogue scenes can be localized with each speaker receiving appropriate lip sync treatment. Some platforms support up to six simultaneous speakers.

Does AI lip sync work with animated content?

Support varies by platform. Most AI lip sync tools are optimized for human faces and may produce inconsistent results with animated characters, stylized illustrations, or non-human subjects. Some specialized tools exist for animation-specific lip sync workflows.

Conclusion

AI lip sync has matured from an experimental technology into an essential business tool for anyone creating multilingual video content. The combination of natural-looking results, dramatic cost reduction, and rapid processing speed makes localization accessible at scales that were previously impossible.

The technology works best when you understand its requirements: clear facial visibility, quality audio, and realistic expectations about edge cases. With these foundations in place, a single video recording can speak to audiences worldwide in their native languages.

For e-commerce sellers, marketing teams, and training departments ready to expand their video reach across language barriers, AI lip sync removes the traditional trade-off between quality and scale.